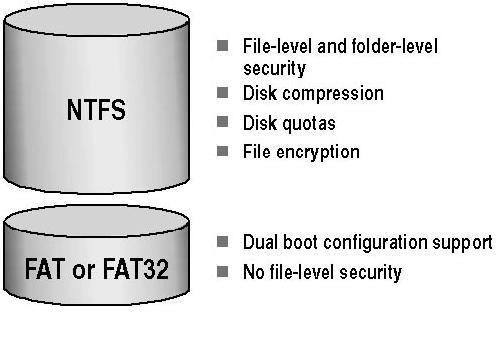

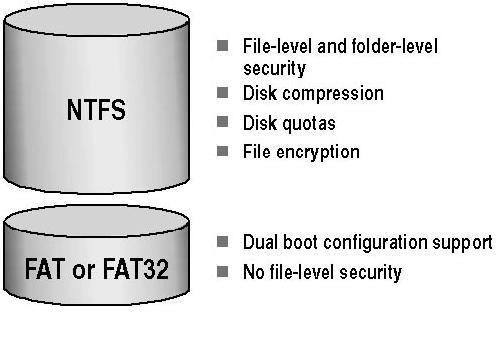

Difference between FAT 32 & NTFS

Faculty: Science 2019 Sample Papers with Solutions Sr. No. Paper Name Question Paper Link Solution Link 1 Immunology, Virology and Pathogenesis Click Here Click Here 2. Cell Biology Click Here Click …

Faculty: Science 2019 Sample Papers with Solutions Sr. No. Paper Name Question Paper Link Solution Link 1 Plant Biotechnology Click Here Click Here 2. Genetic Engineering Click Here Click Here

Faculty: IT 2019 Sample Papers with Solutions Sr. No. Paper Name Question Paper Link Solution Link 1. Cloud Computing Click Here Click Here 2. Analysis & Design of Algorithm Click Here …

Faculty: IT 2019 Sample Papers with Solutions Sr. No. Paper Name Question Paper Link Solution Link 1. Java Technologies Click Here Click Here 2. Web Technologies Click Here Click Here 3. …

Faculty: IT 2019 Sample Papers with Solutions Sr. No. Paper Name Question Paper Link Solution Link 1. Discrete Mathematics Click Here Click Here 2. Programming in C & C++ Click Here …

FAT (FAT16 and FAT32) and NTFS are two methods for storing data on a hard drive. The hard drive either has to be formatted using one or the other, or can be converted from one to the other (usually FAT to NTFS) using a system tool. FAT32 and NTFS are file systems, i.e., sets of logical constructs that an operating system can use to track and manage files on a disk volume. Storage hardware cannot be used without a file system, but not all file systems are supported by all operating systems.

Nearly all operating systems support FAT32 because it is a simple file system that has been around for a very long time. NTFS is more robust and effective than FAT because it uses more advanced data structures to improve reliability, disk space utilization and overall performance.

Comparison chart

Simulation is the process of creating an abstract representation (a model) of important aspects of the real world. Just as flight simulators have long been used to expose pilots and designers to both routine and unexpected circumstances, simulation models can help you explore the behavior of your system under specified situations. Your simulation model can be used to explore changes and alternatives in a low-risk environment. A computer simulation is a simulation, run on a single computer or a network of computers, that reproduces the behavior of a system. The simulation uses an abstract model (a computer model, or a computational model) to simulate the system. Simulation software is based on the process of modeling a real phenomenon with a set of mathematical formulas. It is, essentially, a program that allows the user to observe an operation through simulation without actually performing that operation. Simulation software is widely used to design equipment so that the final product will be as close to design specs as possible without expensive in-process modification.
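As a minimal sketch of "modeling a real phenomenon with a set of mathematical formulas", the Python snippet below simulates a cooling cup of coffee using Newton's law of cooling and a simple Euler time step. The scenario, the function name `simulate_cooling` and all parameter values are illustrative assumptions, not taken from any particular simulation package.

```python
def simulate_cooling(start_temp, ambient_temp, k, dt, steps):
    """Return the temperature after each time step (explicit Euler method).

    Model: dT/dt = -k * (T - ambient_temp)
    All parameter values used below are illustrative assumptions.
    """
    temps = [start_temp]
    temp = start_temp
    for _ in range(steps):
        # Advance the model by one time step of length dt.
        temp += -k * (temp - ambient_temp) * dt
        temps.append(temp)
    return temps


if __name__ == "__main__":
    history = simulate_cooling(start_temp=90.0, ambient_temp=20.0, k=0.1, dt=1.0, steps=30)
    for minute in (0, 10, 20, 30):
        print(f"t={minute:2d} min  T={history[minute]:.1f} C")
```

Changing `k` or `ambient_temp` and re-running is the "explore changes and alternatives in a low-risk environment" idea in miniature: no real coffee has to go cold.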

Computer simulation in practical contexts

Computer simulations are used in a wide variety of practical contexts, such as:

• analysis of air pollutant dispersion using atmospheric dispersion modeling

• design of complex systems such as aircraft and also logistics systems.

• design of noise barriers to effect roadway noise mitigation

• modeling of application performance[10]

• flight simulators to train pilots

• weather forecasting

• forecasting of risk

• emulation, i.e., the simulation of other computers

• forecasting of prices on financial markets (for example Adaptive Modeler)

• behavior of structures (such as buildings and industrial parts) under stress and other conditions

• design of industrial processes, such as chemical processing plants

• strategic management and organizational studies

• reservoir simulation in petroleum engineering to model the subsurface reservoir

• process engineering simulation tools.

If you’re on the hunt for a new workstation PC or gaming laptop, one of the most important factors to take into consideration is the type of processor. The two most common processors used in new models are the Intel Core i5 and Intel Core i7. It may not be clear which processor is right for your needs, especially if you simply want a generally better experience.

Key Differences Between Intel Core i5 and Intel Core i7

Core i7 systems are more expensive than Core i5 systems, usually by several hundred dollars. Core i7 processors have more capabilities than Core i5 CPUs. They are better for multitasking, multimedia tasks, high-end gaming and scientific work. You’ll also find that Core i7-equipped PCs are aimed toward people who want faster systems.

So what are some of the advantages that you can expect to see with the Core i7?

• Faster performance

• Larger cache

• Higher clock speeds

• Hyper-Threading technology

• Integrated graphics

Should You Spend the Money on an Intel Core i7?

First understand how you use your workstation PC. For instance, Hyper-Threading technology (HT) can help a lot with workstation and consumer CPU workloads. Sometimes it doesn’t, but it almost never hurts. If you’re someone who is using your workstation computer for multimedia tasks or running CAD software, then you will benefit from a higher-end processor.

If you use your computer primarily for gaming, HT isn’t necessary. It doesn’t offer a performance boost. That’s not to say that HT will hurt your gaming experience, but don’t expect it to add much either. And while games are getting more CPU heavy, the i5 processors continue to handle them just fine. So if you’re a gamer – even a serious one – a Core i5-equipped system will meet your expectations.

Conclusion

In the end, Intel Core i5 is a great processor that is made for mainstream users who care about performance, speed and graphics. The Core i5 is suitable for most tasks, even heavy gaming. The Intel Core i7 is an even better processor that is made for enthusiasts and high-end users. If you spend your days using CAD software or editing and calculating spreadsheets, the Core i7 will help you accomplish tasks faster.

Customer data come into your organization from every touch point, both physical and virtual—numbers, names, order histories, website preferences. These data are the lifeblood of most organizations today. Like real blood, however, data can become contaminated; when that happens, your organization suffers.

Dirty Data Are Pandemic

Inaccurate customer data probably already exist in your system. Experts across industry estimate that inaccurate data affect as many as 22 to 35 percent of businesses in the United States today. Think about the credit card industry. How many times have you received multiple credit card offers from the same company? Do you already have a card with that company? Another example is when a customer calls an organization for support on a product or service. How many times must the customer tell the same story? How many times does the customer service representative on the other end of the line recognize the problem, know what’s been done and what hasn’t, and take the next step without making the customer repeat any of the previous steps?

The problem with these two scenarios is that the customer data are inaccurate in some way. That could mean that they’ve been duplicated across departments that have siloed information that they don’t share. Or maybe the data are incomplete, wrong, or mislabeled. Syntax errors and even typos are common problems with “dirty data.”

Are Your Data Good or Bad?

The problem with bad data is that they can go unnoticed for so long that the damage is done before an organization even realizes that the error has affected sales or customer confidence. Fortunately, one question will help you determine whether you have good or bad data: Do you have data? If you do, then you have bad data. It happens when sales teams key in orders, when a new application is implemented, or when form fields are mislabeled. Bad data happen, and when they do, they can obscure your 360-degree view of your customer.

What you see instead is a fragmented story from which pieces are missing. They may even be the wrong pieces altogether. The result can be poor customer experiences, which in turn lead to loss of loyalty and confidence. So, yes: Bad data can destroy your customer relationships.

Cleanliness Is Next to Good Customer Relationships

Many organizations still use manual cleansing processes to strip out syntax errors, typos, and record fragments in the data they collect, but this is an expensive, time-consuming way to do it. Fortunately, a variety of tools is available to help organizations clean their data and create a holistic view of the customer across the organization. These tools can help organizations build more consistent data sets across all systems, which helps them better understand their customers. Clean, consistent data also make sales teams and customer service representatives more responsive, which means that the whole organization is more agile, and its customer relationships have more depth and meaning.
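To make the idea of automated cleansing concrete, here is a small Python sketch that normalizes customer records (stray whitespace, inconsistent case, phone formatting) and then removes duplicates. The field names `name`, `email` and `phone`, the sample records and the matching rule are all assumptions chosen for illustration; real cleansing tools apply far richer rules.

```python
import re


def normalize(record):
    """Return a cleaned copy of one customer record (a dict of strings)."""
    name = " ".join(record.get("name", "").split()).title()
    email = record.get("email", "").strip().lower()
    phone = re.sub(r"\D", "", record.get("phone", ""))  # keep digits only
    return {"name": name, "email": email, "phone": phone}


def deduplicate(records):
    """Normalize records and keep the first one seen for each key.

    The key is the e-mail address, or (name, phone) when no e-mail exists.
    """
    seen = {}
    for record in records:
        clean = normalize(record)
        key = clean["email"] or (clean["name"], clean["phone"])
        if key not in seen:
            seen[key] = clean
    return list(seen.values())


if __name__ == "__main__":
    raw = [
        {"name": "  jane   DOE ", "email": "Jane.Doe@Example.com ", "phone": "(555) 010-2000"},
        {"name": "Jane Doe", "email": "jane.doe@example.com", "phone": "555-010-2000"},
        {"name": "John Smith", "email": "", "phone": "555 010 3000"},
    ]
    for row in deduplicate(raw):
        print(row)
```

Note that a simple rule such as `str.title()` can itself introduce errors (for example in names like "McDonald"), which is one reason cleaning data is not easy.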

Don’t misunderstand: Cleaning your data won’t be easy, but it will be worth it. The alternative is a fragmented view of your customer that leads to poor customer experiences, lost sales, and lower profits.

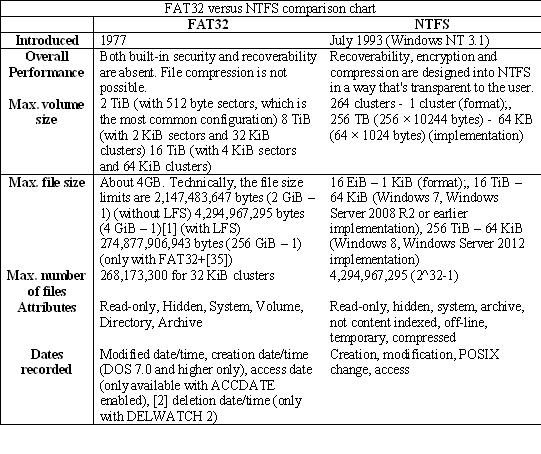

I’m tired of being limited to just DBA_OBJECTS as a test source for exploring a “filtered hierarchy query”. I found out how to generate unique names for each node. Now I just need to link them up…

The real struggle has been: where to start? I know that’s a vitally important issue based on my earlier experimentation. Vitally important or not, I have to get to the root of the issue… (Snicker; at least until the reality sets in…)

Here’s what I’ve got: the query so far (since I’m using a random number generator, your result sets will almost certainly be different). Here’s what the sub queries do:

1. set the number of records as a parameter.

2. generate the nodes, including the parent ID and name (“T”).

3. chain all the nodes together; this results in lots of records.

4. For each ID, choose the record with the longest chain.

First the SQL, then I’ll discuss the results for two sets of ten (10) records I’m “noodling through”. It doesn’t take a large data set to illustrate the issues.

The last three records look promising: there’s a clear loop-of-3, and one of those is marked as a leaf. So just choose that as the end-point?

No, not quite. The LEAF indicator is unreliable in loops. Look at the third and sixth records. Both are loops-of-1 (with itself), but only one is indicated as a leaf node…

In the first result set (above with the SQL), rows 3 and 5 point to each other. Neither is indicated as a leaf.

I could always “throw out” all the loops, but sometimes that’s a big number of records (not that it’s difficult to generate more). Still I’m wondering how to “break” the loops so they become usable.

Unfortunately, the CSV list of nodes is in different order, so it’s not like it is easy (except visually) to determine that 8,9,10 are together as a loop. Otherwise, I’d just take the maximum number in the loop as the start… or can I? I’d have to parse the numbers… not impossible, but annoying…
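Outside of SQL, the "take the maximum number in the loop as the start" idea can be tried out quickly. The Python sketch below is only an experiment under assumptions: the `parent` mapping is made-up sample data (not one of the result sets discussed above), and `find_loops` and `break_loops` are hypothetical helper names. It walks each node's parent chain, collects the loops, and turns the largest ID in each loop into a root.

```python
def find_loops(parent):
    """Return a list of loops, each a sorted list of node IDs.

    `parent` maps every node ID to its parent ID (or None for a root).
    """
    state = {}  # node -> "active" while on the current walk, "done" afterwards
    loops = []
    for start in parent:
        path = []
        node = start
        while node is not None and node not in state:
            state[node] = "active"
            path.append(node)
            node = parent.get(node)
        if node is not None and state.get(node) == "active":
            # The walk ran into itself: everything from `node` onward is a loop.
            loops.append(sorted(path[path.index(node):]))
        for seen in path:
            state[seen] = "done"
    return loops


def break_loops(parent):
    """Return a copy of `parent` where the largest ID in each loop becomes a root."""
    fixed = dict(parent)
    for loop in find_loops(parent):
        fixed[max(loop)] = None
    return fixed


if __name__ == "__main__":
    # Made-up sample: 3 and 5 point at each other, 4 points at itself,
    # and 8, 9, 10 form a loop-of-3.
    parent = {1: 3, 2: 1, 3: 5, 4: 4, 5: 3, 6: 2, 7: 10, 8: 9, 9: 10, 10: 8}
    print(find_loops(parent))   # [[3, 5], [4], [8, 9, 10]]
    print(break_loops(parent))
```

Because each loop comes back as a sorted list of IDs, no CSV parsing is needed to see that 8, 9 and 10 belong together.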

Expectations of Higher Educational Institutions

Institutes of higher education are expected to provide knowledge, know-how, wisdom and character to students: enabling them to understand what they learn in relation to what they already know, creating the ability to generalise from their experiences, taking them beyond mere understanding so that they can put their knowledge to work, and making them capable of deciding their priorities and synthesizing the effect of knowledge, know-how and wisdom, coupled with motivation. Character is recognized by important traits, viz., honesty, integrity, initiative, curiosity, truthfulness, co-operativeness, self-esteem and the ability to work alone and in a group; however, most educational institutions hardly pay any attention to the development of either wisdom or character.

Many educators have not developed wisdom themselves and hence throw up their hands at the thought of imparting it to the students; they think these elements are to be taken care of by someone else. Wisdom and character, the two important human qualities, are best developed by making students participate in creative team activities, wherein they learn to set priorities, to work together, and to develop the social skills required in a society where teamwork is essential to success.

Faculty’s Role

Educational institutes are systems of inter-dependent processes comprising a collection of highly specialized teaching faculty. Every faculty member is a process manager who provides students with opportunities for personal growth and presides over the transformation of inputs into outputs of greater value to the institute and to the ultimate stakeholder. Students enjoy and take pride in learning and accomplishment; hence they are active contributors to the process and are valued for their creativity and intelligence. Teachers work in a system, whereas the Head of an institute works on the system and continuously improves its quality with the help of teachers; students study and learn in a system, and the teachers have to continuously work on the system to improve the teaching quality with the help of students. It takes a quality experience to create an independent learner, so teachers must discuss with students what constitutes a quality experience for them. The objective of quality management is to continuously seek a better way of imparting education to the students. Everyone in the system is expected, invited, and trained to participate in the improvement process, rather than just being dictated to by the top administration.

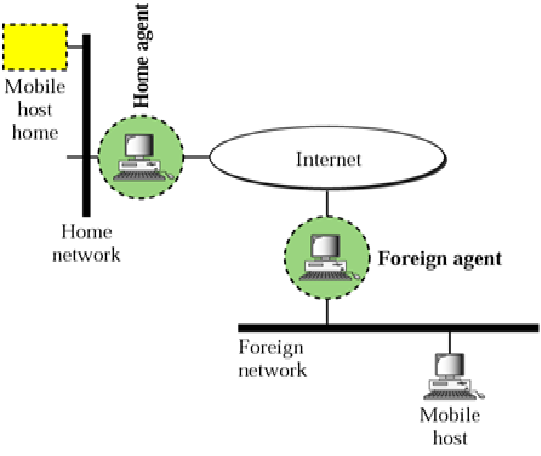

Mobile IP is an Internet Engineering Task Force (IETF) standard communications protocol. In many applications (e.g., VPN, VoIP) sudden changes in network connectivity and IP address can cause problems. Mobile IP protocols were designed to support seamless and continuous internet connectivity. Mobile IP was developed as a means for transparently dealing with problems of mobile users. It enables hosts to stay connected to the Internet regardless of their location and to be tracked without needing to change their IP address. It requires no modifications to IP addresses or IP address format. It has no geographical limitations.

Mobile IP Entities

• Mobile Node (MN): It is the entity that may change its point of attachment from network to network in the Internet. The MN is assigned a permanent IP address, called its home address, to which other hosts send packets regardless of the MN’s location. Since this IP address doesn’t change, it can be used by long-lived applications as the MN’s location changes.

• Home Agent (HA): This is a router with additional functionality. It is located on the home network of the MN. The HA maintains the mobility binding of the MN’s IP address with its COA (care-of address). It forwards packets to the appropriate network when the MN is away.

• Foreign Agent (FA): It is a router with enhanced functionality. If the MN is away from the HA, it uses an FA to send/receive data to/from the HA. The FA advertises itself periodically. It forwards the MN’s registration request. The FA de-encapsulates messages for delivery to the MN.

• Care-of-address (COA): It is an address which identifies MN’s current location. This address is sent by FA to HA when MN attaches. It is usually the IP address of the FA.

• Correspondent Node (CN): CN is the end host to which MN is corresponding (e.g. a web server).

Home agent and foreign agent

Mobile IP Operations

o An MN listens for agent advertisements and then initiates registration. If the responding agent is the HA, then Mobile IP is not necessary.

o After receiving the registration request from an MN, the HA acknowledges it and registration is complete. Registration happens as often as the MN changes networks.

o The HA intercepts all packets destined for the MN. This is simple unless the sending application is on or near the same network as the MN. The HA masquerades as the MN. There is also a de-registration process with the HA if an MN returns home.

o The HA then encapsulates all packets addressed to the MN and forwards them to the FA.

o The FA de-encapsulates all packets addressed to the MN and forwards them via the MN’s hardware address (learned as part of the registration process).
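The registration, encapsulation and de-encapsulation steps above can be sketched as a toy model in Python. This is not a protocol implementation: the class names, the method names and the documentation-range addresses are assumptions made for illustration, and nesting one `Packet` inside another merely stands in for real tunnelling.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Packet:
    src: str
    dst: str
    payload: Union[str, "Packet"]


class HomeAgent:
    def __init__(self, address):
        self.address = address
        self.bindings = {}  # home address -> care-of address (mobility binding)

    def register(self, home_address, care_of_address):
        """Record where the MN currently is."""
        self.bindings[home_address] = care_of_address

    def deregister(self, home_address):
        """Forget the binding when the MN returns home."""
        self.bindings.pop(home_address, None)

    def forward(self, packet):
        """Tunnel the packet to the COA if the MN is away, else pass it on unchanged."""
        coa = self.bindings.get(packet.dst)
        if coa is None:
            return packet
        return Packet(src=self.address, dst=coa, payload=packet)


class ForeignAgent:
    def __init__(self, address):
        self.address = address

    def deliver(self, packet):
        """De-encapsulate a tunnelled packet and return the original one."""
        if packet.dst == self.address and isinstance(packet.payload, Packet):
            return packet.payload
        return packet


if __name__ == "__main__":
    ha = HomeAgent("192.0.2.1")
    fa = ForeignAgent("198.51.100.1")
    ha.register("192.0.2.10", fa.address)             # MN attaches via the FA
    from_cn = Packet("203.0.113.5", "192.0.2.10", "hello")
    print(fa.deliver(ha.forward(from_cn)))            # original packet reaches the MN
```

The correspondent node only ever addresses the MN's home address; the care-of address appears solely on the outer packet between HA and FA.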

Enhancements to the Mobile IP standard, such as Mobile IPv6 and Hierarchical Mobile IPv6 (HMIPv6), were developed to advance mobile communications by making the processes involved less cumbersome.

Big data is a buzzword, or catch-phrase, used to describe a massive volume of both structured and unstructured data that is so large it is difficult to process using traditional database and software techniques. In most enterprise scenarios the volume of data is too big or it moves too fast or it exceeds current processing capacity. Despite these problems, big data has the potential to help companies improve operations and make faster, more intelligent decisions.

An example of big data might be petabytes (1,024 terabytes) or exabytes (1,024 petabytes) of data consisting of billions to trillions of records of millions of people—all from different sources (e.g. Web, sales, customer contact center, social media, mobile data and so on). The data is typically loosely structured data that is often incomplete and inaccessible.

History and Consideration

While the term “big data” is relatively new, the act of gathering and storing large amounts of information for eventual analysis is ages old. The concept gained momentum in the early 2000s when industry analyst Doug Laney articulated the now-mainstream definition of big data as the three Vs (volume, velocity and variety). Two further dimensions, variability and complexity, are often considered alongside them:

Volume. Organizations collect data from a variety of sources, including business transactions, social media and information from sensor or machine-to-machine data. In the past, storing it would’ve been a problem – but new technologies (such as Hadoop) have eased the burden.

Velocity. Data streams in at an unprecedented speed and must be dealt with in a timely manner. RFID tags, sensors and smart metering are driving the need to deal with torrents of data in near-real time.

Variety. Data comes in all types of formats – from structured, numeric data in traditional databases to unstructured text documents, email, video, audio, stock ticker data and financial transactions.

Variability. In addition to the increasing velocities and varieties of data, data flows can be highly inconsistent with periodic peaks. Is something trending in social media? Daily, seasonal and event-triggered peak data loads can be challenging to manage. Even more so with unstructured data.

Complexity. Today’s data comes from multiple sources, which makes it difficult to link, match, cleanse and transform data across systems. However, it’s necessary to connect and correlate relationships, hierarchies and multiple data linkages or your data can quickly spiral out of control.

Advantages of Big data:

1) Cost reductions

2) Time reductions

3) New product development and optimized offerings

4) Smart decision making. When you combine big data with high-powered analytics, you can accomplish business-related tasks such as:

Forensic means to use in court. Computer forensics is the collection, preservation, analysis, extraction, documentation and in some cases, the court presentation of computer-related evidence which has either been generated by a computer or has been stored on computer media.

Traditional Forensics vs. Computer Forensics

The difference between traditional forensics workers and computer forensics investigators is that the traditionalists tend to work in only two locations: the initial crime scene and the lab, where the majority of the investigation goes on. Computer forensics investigators may have to carry out their analyses wherever the equipment is located or found, rather than just in a pristine environment, and the products they use come from a market-driven private sector. Traditional forensics investigators are expected to interpret their findings and draw conclusions accordingly, whereas computer forensics investigators must only produce direct information and data that have significance in the case they are investigating.

Component steps for Computer Forensics:

Make a Forensic Image

• Requires Extensive Knowledge of Computer Hardware and Software, Especially File Systems and Operating Systems.

• Requires Special “Forensics” Hardware and Software

• Requires Knowledge of Proper Evidence Handling.

Create indexes and set up the “case”

AccessData Forensic Toolkit (FTK)

• Implements “Hashing” concept.

• Hashing is done using a hash function, a well-defined procedure or mathematical function that turns some kind of data into a comparatively small integer.

• The values returned by a hash function are called hash values.
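As a rough illustration of hashing, the Python sketch below computes a hash value for a file with the standard-library `hashlib` module and compares it with a previously recorded value, which is one way to check that an image has not changed. The choice of SHA-256 and the helper names `file_sha256` and `verify_image` are assumptions for this example; nothing here is meant to describe how FTK itself works.

```python
import hashlib


def file_sha256(path, chunk_size=65536):
    """Return the SHA-256 hash value of a file as a hexadecimal string."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so that large disk images do not have to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_image(path, expected_hash):
    """Return True if the file's current hash matches the recorded one."""
    return file_sha256(path) == expected_hash.lower()
```

The hexadecimal string is just a readable form of the 256-bit integer the hash function produces; changing even a single byte of the file gives a different value.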

Look for evidence within the image

• View Emails, Documents, Graphics etc.

• Searching for Keywords

• Bookmark relevant material for inclusion into report

• Good investigation skills needed to interview the client to get background material needed to focus the CF investigation.

Generate CF Report

• Usually in HTML format

• Can be printed or on CD-ROM

• Basis for Investigation Report, Deposition, Affidavits, and Testimony.

• CF Report often supplemented with other investigation methods (Online Databases, Email / Phone Interviews)

Forensic Software:

Byte Back, Drive Spy, EnCase, Forensic Tool Kit (FTK), Hash Keeper, Ilook, Maresware, Password Recovery Toolkit (PRTK), Safeback, Thumbs Plus

Pros and cons of Computer Forensics

Pros:

• Searching and analyzing of a mountain of data quickly and efficiently.

• Valuable data which has been deleted and lost by offenders can be retrieved. It may become substantial evidence in court.

• Preservation of evidence.

• Ethical process

Cons:

• Retrieval of data is costly.

• Evidence on the other hand can only be captured once.

• Privacy concerns.

• Data corruption

With computers becoming more and more involved in our everyday lives, both socially and professionally, there is a need for computer forensics. This field enables crucial electronic evidence to be found, whether it was deleted, lost, hidden, or damaged, and used to prosecute individuals who believe they have successfully beaten the system.

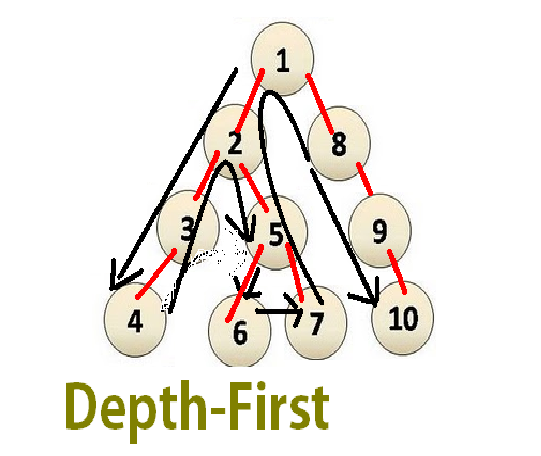

Depth-first search (DFS) is an algorithm for traversing or searching tree or graph data structures. One starts at the root and explores as far as possible along each branch before backtracking.

A version of depth-first search was investigated in the 19th century by French mathematician Charles Pierre Trémaux as a strategy for solving mazes.

The nodes are visited in the order 1, 2, 3, 4, 5, 6, 7, 8, 9, 10.

Pseudo code:

Input: A graph G and a vertex v of G

Output: All vertices reachable from v labeled as discovered

A recursive implementation of DFS:

    procedure DFS(G, v):
        label v as discovered
        for all edges from v to w in G.adjacentEdges(v) do
            if vertex w is not labeled as discovered then
                recursively call DFS(G, w)

A non-recursive implementation of DFS:

    procedure DFS-iterative(G, v):
        let S be a stack
        S.push(v)
        while S is not empty
            v = S.pop()
            if v is not labeled as discovered:
                label v as discovered
                for all edges from v to w in G.adjacentEdges(v) do
                    S.push(w)
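For readers who want to run the two procedures, here is one possible Python rendering. It assumes the graph is given as a dictionary mapping each vertex to a list of its neighbours; that representation and the function names are choices made for this sketch, not part of the pseudocode.

```python
def dfs_recursive(graph, v, discovered=None):
    """Return the vertices reachable from v in the order they are discovered."""
    if discovered is None:
        discovered = {}  # a dict keeps insertion order and gives fast lookups
    discovered[v] = True
    for w in graph.get(v, ()):
        if w not in discovered:
            dfs_recursive(graph, w, discovered)
    return list(discovered)


def dfs_iterative(graph, v):
    """Same traversal with an explicit stack instead of recursion."""
    discovered = {}
    stack = [v]
    while stack:
        v = stack.pop()
        if v not in discovered:
            discovered[v] = True
            for w in graph.get(v, ()):
                stack.append(w)
    return list(discovered)


if __name__ == "__main__":
    graph = {1: [2, 3], 2: [4], 3: [4], 4: []}
    print(dfs_recursive(graph, 1))  # [1, 2, 4, 3]
    print(dfs_iterative(graph, 1))  # [1, 3, 4, 2]
```

Both versions discover the same set of vertices, but the order differs on this graph: the stack hands back the most recently pushed neighbour first, so the iterative version explores the last neighbour of each vertex before the first.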

Applications: There are various applications of DFS.

• Finding connected components.

• Detecting cycles in a graph.

• Finding the bridges of a graph.

• Generating words in order to plot the Limit Set of a Group.

• Finding strongly connected components.

• Planarity testing.

• Solving puzzles with only one solution, such as mazes. (DFS can be adapted to find all solutions to a maze by only including nodes on the current path in the visited set.)

• Maze generation may use a randomized depth-first search.

• Finding biconnectivity in graphs.
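The maze remark in the list above (treat only the nodes on the current path as visited) can be sketched in Python as follows. The maze is modelled as a dictionary of neighbouring cells; the cell names and the function name `all_paths` are assumptions for this example.

```python
def all_paths(graph, start, goal, path=None):
    """Yield every simple path from start to goal.

    Only vertices on the current path count as visited, so a vertex can be
    reused by a different solution.
    """
    path = (path or []) + [start]
    if start == goal:
        yield path
        return
    for neighbour in graph.get(start, ()):
        if neighbour not in path:
            yield from all_paths(graph, neighbour, goal, path)


if __name__ == "__main__":
    # A tiny maze with two routes from A to D.
    maze = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": ["B", "C"]}
    for solution in all_paths(maze, "A", "D"):
        print(" -> ".join(solution))
```

With a single global visited set, cell D would be marked after the first route and the second route through C would never be reported.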